Jungbin Cho

I am a first-year Master’s student at Yonsei University, advised by Professor Yu in MIR Lab. I also received my B.S. in Electrical and Electronic Engineering from Yonsei University.


News

Research

I am interested in building realistic virtual environments and digital avatars that can interact with humans in intuitive and natural ways. Toward this goal, I develop and refine methods in computer vision, computer graphics, and generative modeling. Recently, my work has focused on 3D human motion generation and understanding how humans interact with their surrounding scenes.


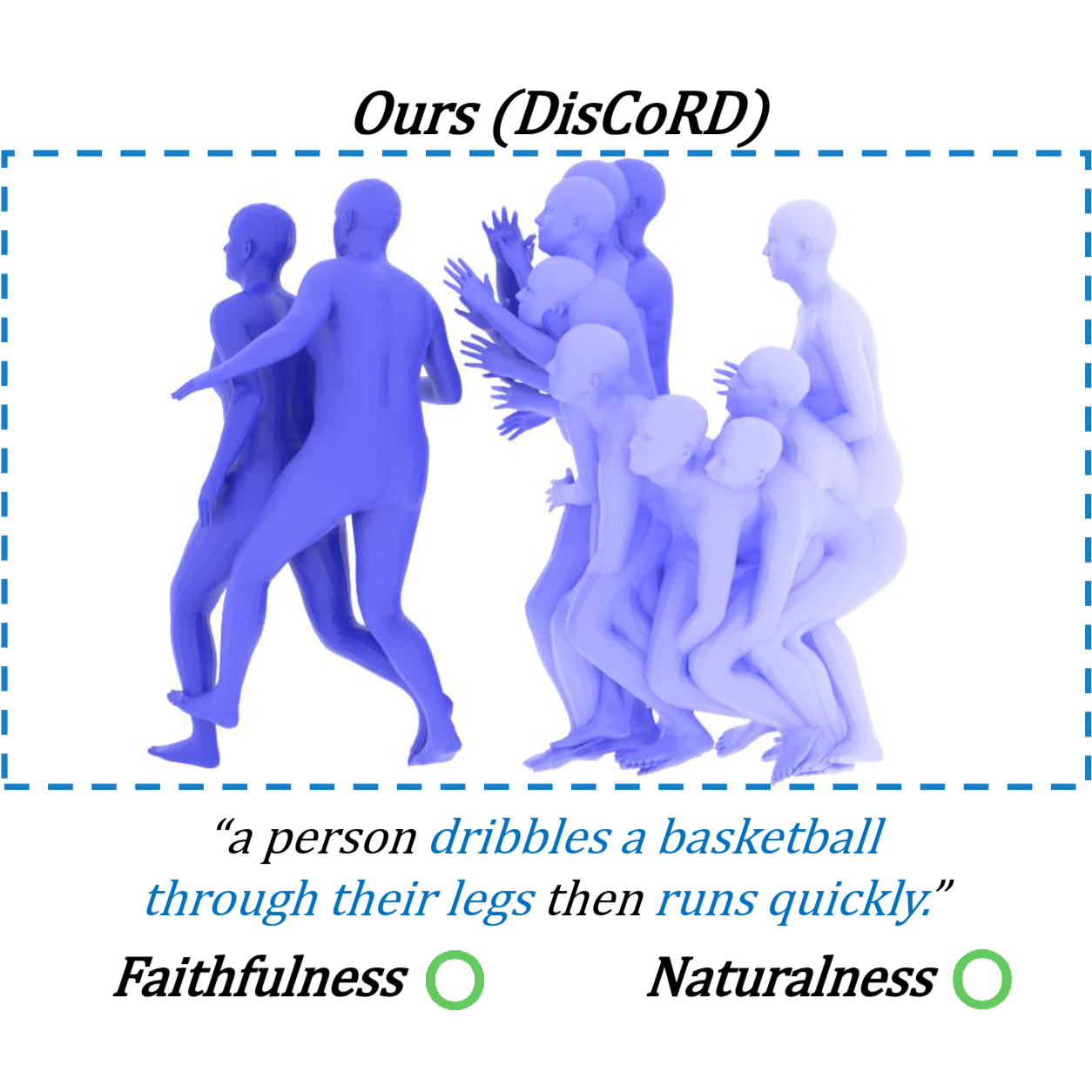

DisCoRD: Discrete Tokens to Continuous Motion via Rectified Flow Decoding

Jungbin Cho*, Junwan Kim*, Jisoo Kim, Minseo Kim, Mingu Kang, Sungeun Hong, Tae-Hyun Oh, Youngjae Yu

ICCV 2025 (Highlight)

Generating smooth and natural motion by decoding discrete motion tokens with rectified flow.

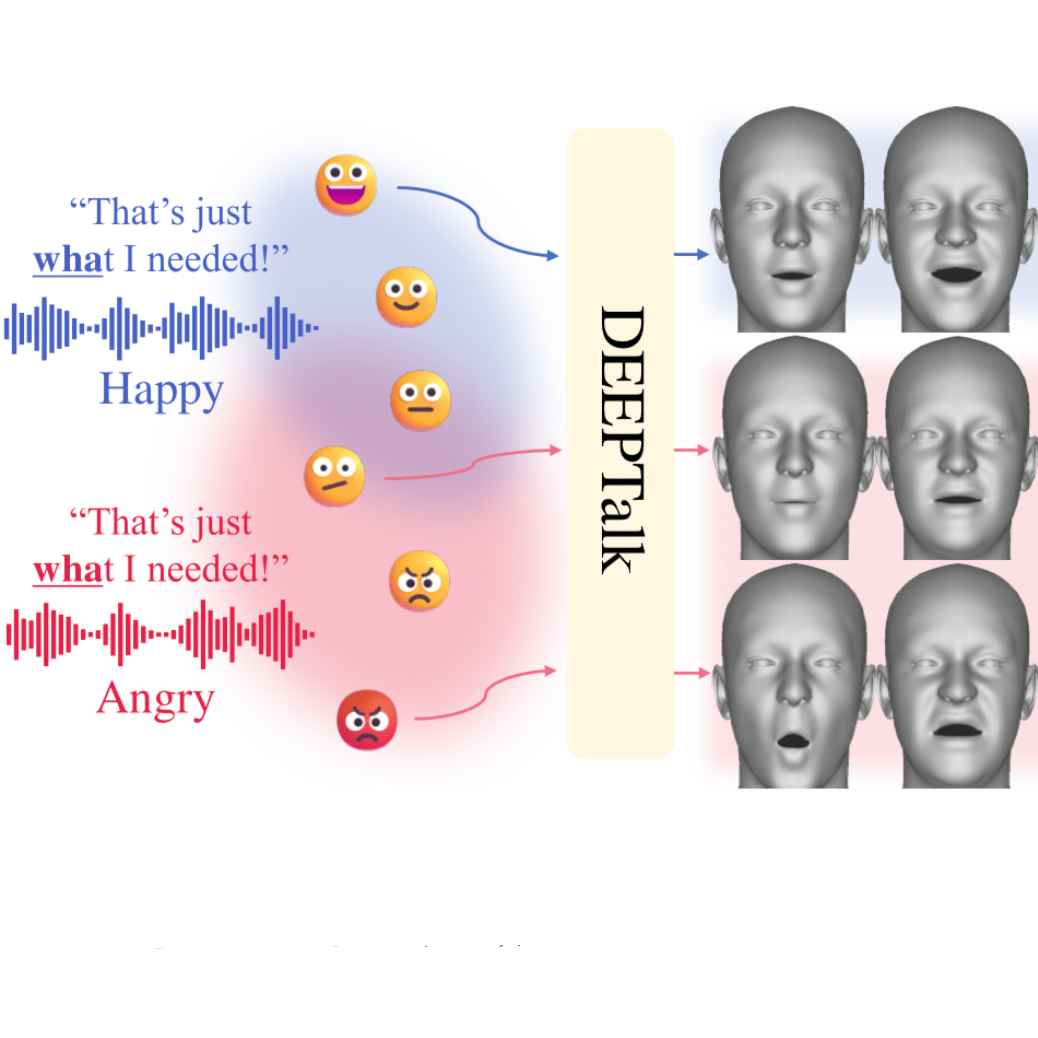

DEEPTalk: Dynamic Emotion Embedding for Probabilistic Speech-Driven 3D Face Animation

Jisoo Kim*, Jungbin Cho*, Joonho Park, Soonmin Hwang, Da Eun Kim, Geon Kim, Youngjae Yu

AAAI 2025

Generating dynamic emotional talking faces using probabilistic embeddings and a temporally hierarchical motion tokenizer.

EgoSpeak: Learning When to Speak for Egocentric Conversational Agents in the Wild

Junhyeok Kim, Minsoo Kim, Jiwan Chung, Jungbin Cho, Jisoo Kim, Sungwoong Kim, Gyeongbo Sim, Youngjae Yu

NAACL Findings 2025

Detecting when an egocentric conversational agent should speak.

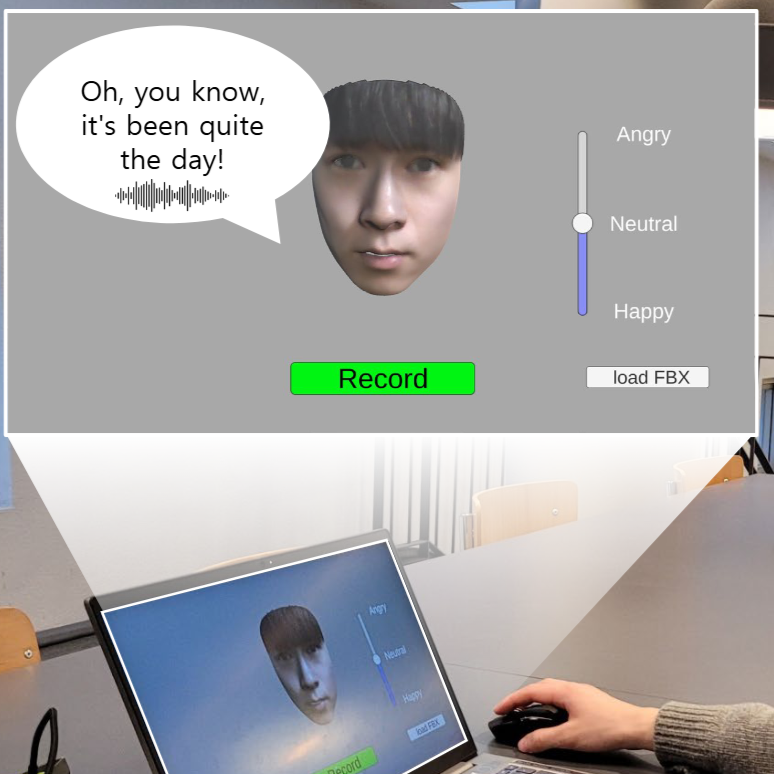

AVIN-Chat: An Audio-Visual Interactive Chatbot System with Emotional State Tuning

Chanhyuk Park*, Jungbin Cho*, Junwan Kim*, Seongmin Lee, Jungsu Kim, Sanghoon Lee

IJCAI Demo 2024

A chatbot system designed for face-to-face interactions, featuring customizable virtual avatars for personalized conversations.

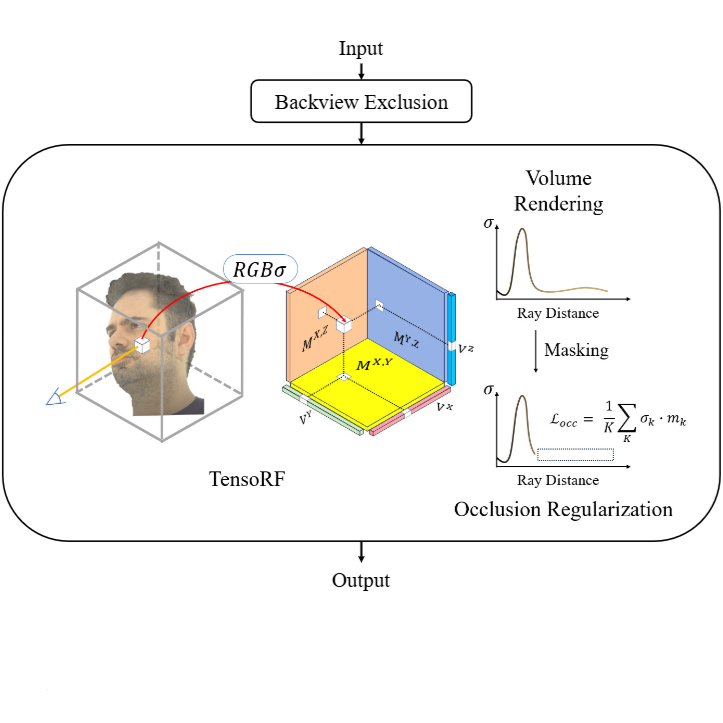

VSCHH 2023: A Benchmark for the View Synthesis Challenge of Human Heads

Youngkyoon Jang, ..., Hyeseong Kim, Jungbin Cho, Dosik Hwang, ..., Stefanos Zafeiriou

ICCV Workshop 2023

Reconstructing high-resolution 3D human heads from sparse input views.